[ARCHIVE] Serving a BERT Model with PyTorch using TorchServe

Finally

So PyTorch is finally getting decent (?) production serving capabilities. TorchServe was introduced a couple of days ago, along with other interesting things.

TorchServe was announced as an “industrial-grade path to deploying PyTorch models for inference at scale”. In this tutorial, we will try to serve a fine-tuned BERT model.

Installing the requirements

The two things you need to get going are torchserve and torch-model-archiver:

```bash
pip install torchserve torch-model-archiver
```
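A quick way to confirm that both packages ended up in the active environment is to ask Python for their installed versions. This is only a sketch built on the standard library's importlib.metadata; the helper name installed_versions is hypothetical and not part of TorchServe.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("torchserve", "torch-model-archiver")):
    """Return {distribution name: installed version, or None if missing}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            # Not installed in the current environment.
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

If either entry prints as NOT INSTALLED, the pip install most likely went into a different environment than the one you are running.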

Converting the model to MAR file

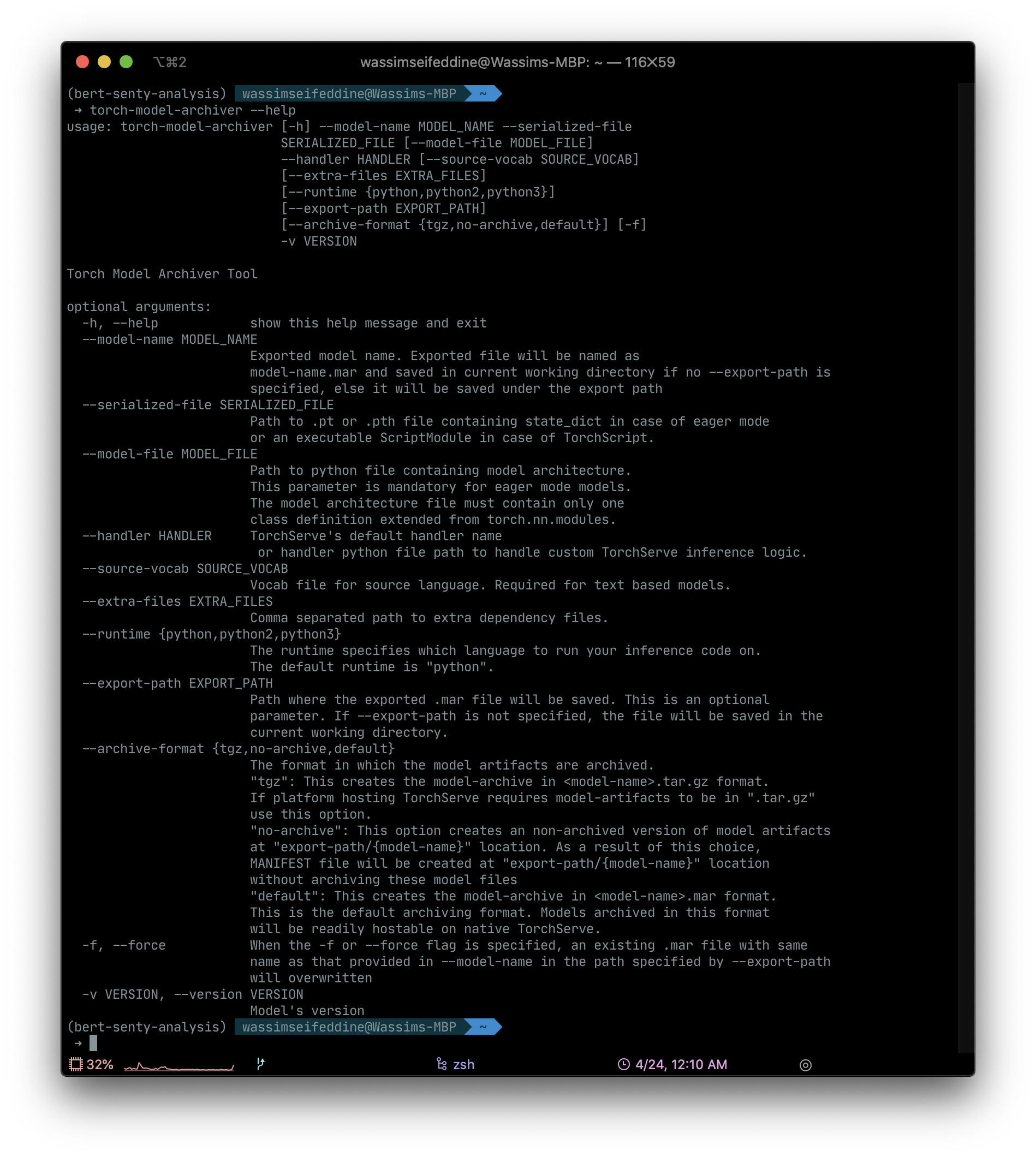

Before serving the model, you need to package it into a .mar (model archive) file. For this step, we are going to use the torch-model-archiver we just installed.

Some parameters you should pay attention to:

- --model-name: the name you want to give the model

- --serialized-file: the trained model weights file

- --model-file: the model definition file (the Python file containing the model class)

- --handler: covered in the next section

- --extra-files: any extra files you want to add to your serving package; we will see why this is useful
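To see how these parameters fit together, here is a minimal Python sketch that assembles the torch-model-archiver argument list. The function build_archiver_args is hypothetical: it only builds the list from the options described above (plus --version) and joins multiple extra files with commas, which is how the archiver expects them.

```python
import shlex


def build_archiver_args(model_name, version, model_file, serialized_file,
                        handler, extra_files=()):
    """Build the torch-model-archiver argument list (hypothetical helper)."""
    args = [
        "torch-model-archiver",
        "--model-name", model_name,            # name given to the model
        "--version", version,
        "--model-file", model_file,            # model definition (.py)
        "--serialized-file", serialized_file,  # trained weights
        "--handler", handler,                  # built-in name or custom .py
    ]
    if extra_files:
        # Several extra files go in as a single comma-separated value.
        args += ["--extra-files", ",".join(extra_files)]
    return args


if __name__ == "__main__":
    args = build_archiver_args(
        "my-model-name", "1.0", "model.py", "./model.bin",
        "MyCustomHandler.py", extra_files=["params.py"],
    )
    # Print as a copy-pasteable shell command; pass `args` to
    # subprocess.run(args, check=True) to actually build the .mar.
    print(shlex.join(args))
```

Building a list instead of a single string avoids shell-quoting problems if any path contains spaces.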

A sample command would look something like this:

```bash
torch-model-archiver --model-name my-model-name --version 1.0 \
    --model-file model.py --serialized-file ./model.bin \
    --extra-files params.py --handler MyCustomHandler.py
```

The --extra-files option should include every file that model.py uses. In my case, that was params.py, which holds the hyperparameters of my model. Multiple files are passed as a comma-separated list.
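A forgotten extra file typically only surfaces when TorchServe imports model.py at load time, so it can help to check up front. The sketch below is a hypothetical helper, not part of torch-model-archiver: it parses model.py with Python's ast module, collects import statements that match .py files in the same directory, and reports any that are missing from --extra-files. It assumes a flat directory layout and skips relative imports.

```python
import ast
import os


def local_imports(model_source, local_files):
    """Return the local .py files that model_source imports (anywhere in it)."""
    local_modules = {
        os.path.splitext(os.path.basename(f))[0]
        for f in local_files if f.endswith(".py")
    }
    imported = set()
    for node in ast.walk(ast.parse(model_source)):
        if isinstance(node, ast.Import):
            # `import params` or `import utils.text` -> first package name
            imported.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # `from params import X`; relative imports (level > 0) are skipped
            imported.add(node.module.split(".")[0])
    return {name + ".py" for name in imported & local_modules}


def missing_extra_files(model_source, local_files, extra_files):
    """List local modules used by model_source but absent from extra_files."""
    listed = {os.path.basename(f) for f in extra_files}
    return sorted(local_imports(model_source, local_files) - listed)
```

For example, from the directory holding model.py you could call `missing_extra_files(open("model.py").read(), os.listdir("."), ["params.py"])`; an empty list means every local module it found is covered.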

The output of the torch-model-archiver command is a single my-model-name.mar file.
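As far as I know, a .mar file is a zip archive holding the model files plus a MAR-INF/MANIFEST.json that describes the model, so you can peek inside with Python's zipfile module. A minimal sketch, assuming that layout; describe_mar is a hypothetical helper name.

```python
import json
import zipfile

MANIFEST = "MAR-INF/MANIFEST.json"  # assumed manifest location inside the .mar


def describe_mar(path_or_file):
    """Return (file names, parsed manifest or None) for a .mar archive."""
    with zipfile.ZipFile(path_or_file) as mar:
        names = mar.namelist()
        manifest = json.loads(mar.read(MANIFEST)) if MANIFEST in names else None
    return names, manifest
```

Calling `describe_mar("my-model-name.mar")` on the archive built above should list model.py, the weights, the handler and params.py next to the manifest, which is a cheap way to confirm nothing was left out.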